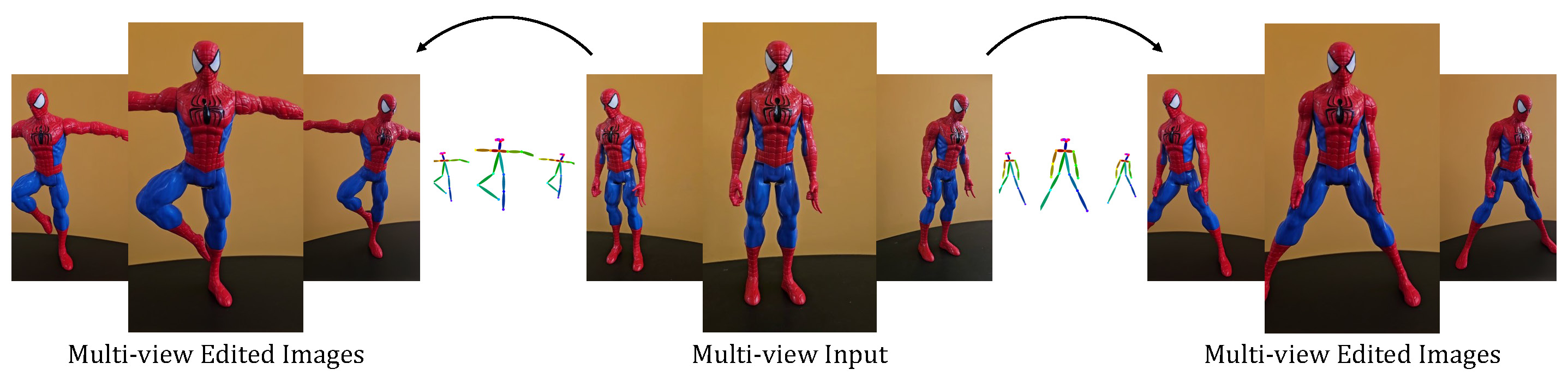

Given a multi-view image set of a static scene, we apply a geometric edit to the images while ensuring consistency between the different views. To do so, we use a diffusion model to edit the images and consolidate the attention features of the generated images along the denoising process, making them consistent. The consolidation is done by training a Neural Radiance Field on the features and then injecting the rendered, consistent features into the diffusion model.

In the above video we show NeRFs trained on our edited images.

Large-scale text-to-image models enable a wide range of image editing techniques, using text prompts or even spatial controls. However, applying these editing methods to multi-view images depicting a single scene leads to 3D-inconsistent results. In this work, we focus on spatial control-based geometric manipulations and introduce a method to consolidate the editing process across various views. We build on two insights: (1) maintaining consistent features throughout the generative process helps attain consistency in multi-view editing, and (2) the queries in self-attention layers significantly influence the image structure. Hence, we propose to improve the geometric consistency of the edited images by enforcing the consistency of the queries. To do so, we introduce QNeRF, a neural radiance field trained on the internal query features of the edited images. Once trained, QNeRF can render 3D-consistent queries, which are then softly injected back into the self-attention layers during generation, greatly improving multi-view consistency. We refine the process through a progressive, iterative method that better consolidates queries across the diffusion timesteps. We compare our method to a range of existing techniques and demonstrate that it can achieve better multi-view consistency and higher fidelity to the input scene. These advantages allow us to train NeRFs that have fewer visual artifacts and are better aligned with the target geometry.

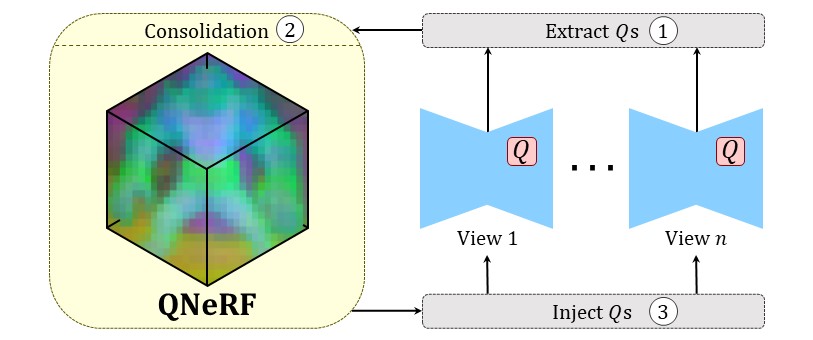

We generate the multi-view edited images simultaneously with a diffusion model. To consolidate the images, along the denoising process we (1) extract self-attention queries from the network, (2) train a NeRF (termed QNeRF) on the extracted queries and render consolidated queries, and (3) softly inject the rendered queries back into the network for each view. We repeat these steps throughout the denoising process.

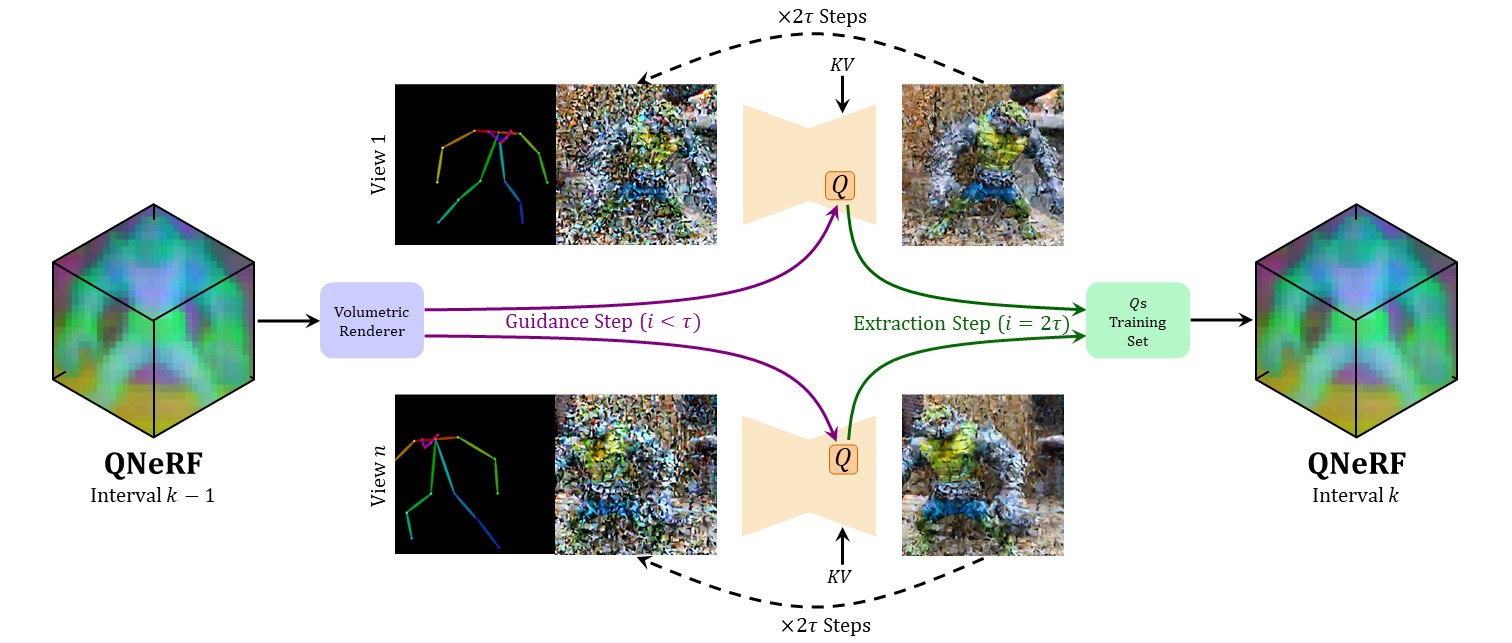

In practice, we perform the denoising process in intervals. In each interval, we interleave consolidation steps with steps that allow the features to evolve. At the last step of each interval we extract self-attention queries and train a QNeRF on them. Rendered queries of this QNeRF are injected in the consolidation steps of the next interval.
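The interval structure described above can be sketched as a simple schedule. This is a minimal illustration, not the authors' implementation: the function name `build_interval_schedule`, its parameters, and the choice to leave the first interval unguided (because no QNeRF has been trained yet) are assumptions made for the example.

```python
from typing import List, Tuple


def build_interval_schedule(
    num_steps: int, interval_len: int, guided_steps: int
) -> List[Tuple[int, str, bool]]:
    """Return (step, mode, extract_queries) for every denoising step.

    mode is "guided" for consolidation steps and "free" for steps that let
    the features evolve. extract_queries is True on the last step of each
    interval, where queries would be extracted to train the next QNeRF.
    All names and parameters here are hypothetical.
    """
    if not 0 <= guided_steps <= interval_len:
        raise ValueError("guided_steps must lie in [0, interval_len]")

    schedule = []
    for step in range(num_steps):
        interval_index = step // interval_len
        position = step % interval_len
        # Assumption: the first interval has no trained QNeRF to guide it.
        has_qnerf = interval_index > 0
        mode = "guided" if has_qnerf and position < guided_steps else "free"
        extract_queries = position == interval_len - 1
        schedule.append((step, mode, extract_queries))
    return schedule


schedule = build_interval_schedule(num_steps=6, interval_len=3, guided_steps=2)
for step, mode, extract_queries in schedule:
    print(step, mode, "extract + train QNeRF" if extract_queries else "")
```

With these toy settings, the second interval starts with two guided steps that would use the QNeRF trained at the end of the first interval, then ends with an unguided step where queries are extracted again.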

In each multi-view denoising interval we have query-guided steps, followed by steps without guidance. In query-guided steps, we update the noisy latent code to minimize the distance between the self-attention queries it produces and the queries rendered from the QNeRF. At the last step of the interval, we extract the generated queries and use them to train the QNeRF that provides guidance for the next interval. Query guidance consolidates the geometry across the different views. In addition, we inject the keys and values of self-attention layers from the original images to preserve the appearance.
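To make the query-guided update concrete, the sketch below performs gradient steps on a latent code so that its queries move toward a set of target queries. It rests on strong simplifying assumptions: the diffusion network is replaced by a fixed linear map `w` (in the real model the queries come from self-attention layers and the gradient would be obtained by automatic differentiation), the proximity objective is taken to be a squared L2 distance, and all names and values are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def project(w: Matrix, z: Vector) -> Vector:
    """Toy stand-in for 'queries generated by the latent code': q = W z."""
    return [sum(w_ij * z_j for w_ij, z_j in zip(row, z)) for row in w]


def query_loss(w: Matrix, z: Vector, q_rendered: Vector) -> float:
    """Squared L2 distance between generated and rendered queries."""
    return sum((a - b) ** 2 for a, b in zip(project(w, z), q_rendered))


def guidance_step(w: Matrix, z: Vector, q_rendered: Vector, lr: float) -> Vector:
    """One gradient-descent step on the latent code.

    For the linear toy model, the gradient of ||W z - q||^2 with respect
    to z is 2 W^T (W z - q).
    """
    residual = [a - b for a, b in zip(project(w, z), q_rendered)]
    grad = [
        2.0 * sum(w[i][j] * residual[i] for i in range(len(w)))
        for j in range(len(z))
    ]
    return [z_j - lr * g_j for z_j, g_j in zip(z, grad)]


# Hypothetical toy values: 2-D latent, 3-D query vector.
w = [[1.0, 0.5], [0.0, 1.0], [0.5, -1.0]]
z = [0.2, -0.4]
q_rendered = [1.0, 0.5, 0.0]  # stands in for queries rendered by a QNeRF

loss_before = query_loss(w, z, q_rendered)
for _ in range(20):
    z = guidance_step(w, z, q_rendered, lr=0.1)
loss_after = query_loss(w, z, q_rendered)
print(loss_before, loss_after)
```

Because the update acts on the latent code rather than overwriting the queries directly, the network remains free to settle on features that are close to, but not identical to, the rendered ones, which is one way to read the "soft" injection described above.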

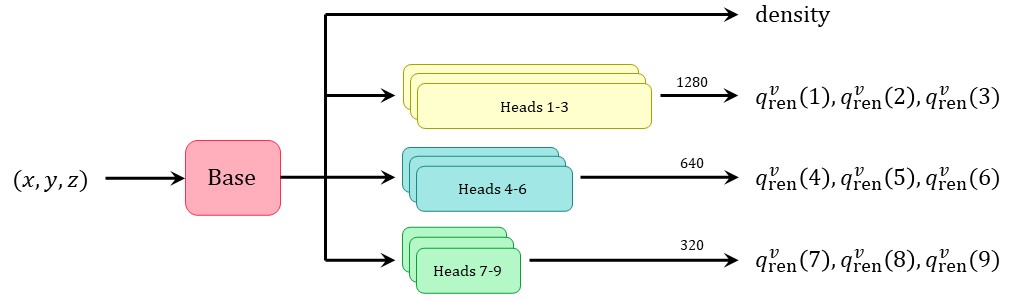

The centerpiece of our approach is the QNeRF, a Neural Radiance Field trained on query features extracted from the diffusion model during the denoising process. The inherent 3D consistency of the QNeRF drives the consolidation of the queries.

The architecture of the QNeRF is illustrated above. Nine heads are attached to the base network, to produce queries corresponding to nine self-attention layers of the diffusion model. Each group of heads corresponds to a self-attention layer of a certain resolution, and the number displayed above the arrow represents the number of channels in that group (1280, 640, 320).
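The multi-head layout can be sketched as a shared feature vector feeding nine linear heads. This is only an illustration under stated assumptions: the text gives nine heads and three channel sizes (1280, 640, 320) but not how the heads are split across groups, so three heads per group is assumed here; the feature dimension, the plain linear heads, and the class name `QueryHeads` are likewise hypothetical, and volume rendering is omitted entirely.

```python
import random
from typing import List

# Assumption: three heads per channel group, nine heads in total.
HEAD_CHANNELS = [1280] * 3 + [640] * 3 + [320] * 3


class QueryHeads:
    """Linear heads mapping a shared base-network feature to query vectors."""

    def __init__(self, feature_dim: int, head_channels: List[int], seed: int = 0):
        rng = random.Random(seed)
        # One (channels x feature_dim) weight matrix per head.
        self.weights = [
            [[rng.gauss(0.0, 0.02) for _ in range(feature_dim)] for _ in range(c)]
            for c in head_channels
        ]

    def __call__(self, feature: List[float]) -> List[List[float]]:
        # Each head produces one query vector for its self-attention layer.
        return [
            [sum(w * f for w, f in zip(row, feature)) for row in head]
            for head in self.weights
        ]


heads = QueryHeads(feature_dim=8, head_channels=HEAD_CHANNELS)
queries = heads([0.1] * 8)  # feature of a single sample point (toy input)
print([len(q) for q in queries])
```

Sharing one base network across all heads means every query layer is tied to the same underlying 3D representation, which matches the role the QNeRF plays in consolidating queries across views.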

In the above videos we demonstrate the effect of the QNeRF. The left half of each of the three videos shows the queries extracted from the diffusion model, on which a QNeRF is trained. The right half shows the queries rendered from the corresponding QNeRF. The QNeRF consolidates the queries, as the more consistent right half of each video shows. From left to right, the queries are of resolutions 16, 32, 64.

As can be seen, existing approaches for multi-view editing, such as those using flows (TokenFlow) or iterative dataset updates for pixel-space NeRFs (IN2N and IN2N-CSD), often implicitly assume that we do not change the underlying geometry. Violating this assumption leads to visual artifacts, like duplicated and translucent limbs, or just lower quality.